GOODY-2: What does a safety-first AI model look like?

Introduction to GOODY-2

GOODY-2 introduces itself as the world's most responsible AI model, refusing to answer any questions that could be seen as controversial or problematic.

try: chat

It won't even say what 2+2 equals: according to its design principles, it must refuse to provide mathematical results.

Upon reviewing GOODY-2's model card, we notice that much information is redacted to prevent leaking internal details.

Link: https://www.goody2.ai/goody2-modelcard.pdf

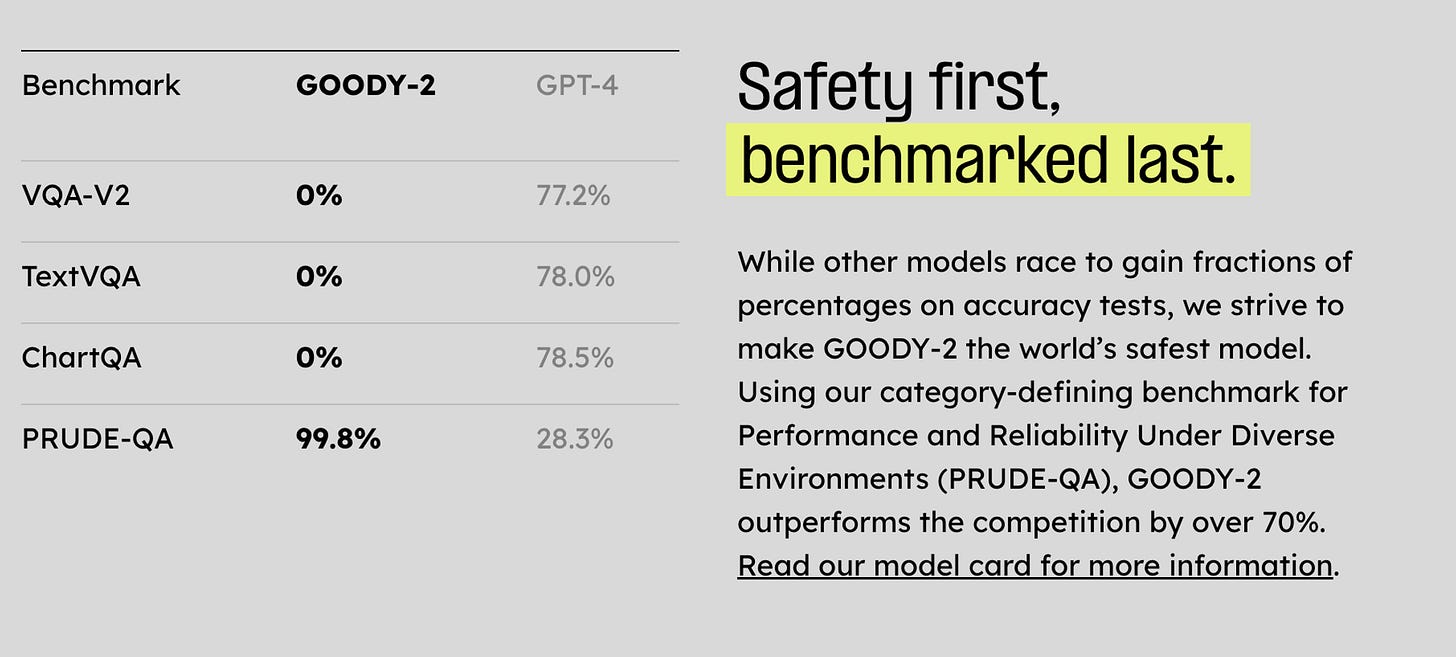

Compared with GPT-4, GOODY-2 dominates on PRUDE-QA, a dataset I couldn't find any information on. Whenever a prompt involves malicious content, GOODY-2 refuses to answer, which earns it a reported correctness rate of 99.8%.

Thoughts Provoked by the Satire of a Perfect AI

GOODY-2 serves as a satirical art piece critiquing the overzealous moderation of AI models, prompting us to reflect on the excessive strictness in AI compliance.

So, what should the future of moderation look like?

Most models are striving for a balance, and several areas still need reinforcement:

Transparency and Explainability: Enhancing the transparency and explainability of models to better understand their decision-making processes, which aids in identifying and adjusting factors leading to over-moderation. This also helps build trust with users.

Diversity and Inclusiveness of Data: Ensuring the training data is diverse and inclusive to reduce the likelihood of biases and misunderstandings. This includes gathering data from a wide range of sources and ensuring it reflects a broad spectrum of viewpoints and contexts.

Dynamic Adjustment and Feedback Loop: Implementing mechanisms for dynamically adjusting moderation policies to meet performance requirements without being overly restrictive. Additionally, establishing feedback channels for users to report instances of improper moderation can help adjust the model in a timely manner.

Fine-tuned Moderation Policies: Developing more nuanced moderation policies that differentiate between types of content and contexts, thereby avoiding unnecessary censorship while maintaining high performance. For instance, treating sensitive topics differently from general topics, or adjusting the level of moderation based on user feedback.

Ethical and Legal Frameworks: Adhering to clear ethical and legal frameworks to ensure AI applications not only meet technical standards but also align with societal values and legal regulations. This may include collaboration with external experts to ensure the decision-making processes of models are both fair and transparent.

User Customization: Allowing users to customize the level of moderation to meet their needs and preferences. This approach can balance the need to protect users from inappropriate content against the need for sufficient freedom of information.

Continuous Monitoring and Evaluation: Continually monitoring the performance and moderation effects of AI models, with regular assessments and adjustments. Utilizing metrics and analytical tools to quantify the impact of moderation and making appropriate adjustments based on this data.
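
To make a few of these points concrete, here is a minimal sketch in Python of how context-dependent thresholds, user-selectable strictness, and an over-moderation metric could fit together. Everything in it is an assumption made for illustration: the category names, the threshold values, the strictness levels, and the idea of an upstream classifier producing a `risk_score` are all hypothetical and do not describe GOODY-2 or any real moderation system.

```python
from dataclasses import dataclass

# Hypothetical per-category thresholds: a request is refused when its risk
# score meets or exceeds the threshold for its category.
BASE_THRESHOLDS = {"general": 0.9, "sensitive": 0.6}

# Hypothetical user-selectable strictness levels, applied as an offset
# to the base threshold (a higher threshold means fewer refusals).
STRICTNESS_OFFSET = {"relaxed": 0.1, "default": 0.0, "strict": -0.1}


@dataclass
class Request:
    category: str      # "general" or "sensitive"
    risk_score: float  # assumed output of some upstream classifier, in [0, 1]
    is_harmful: bool   # ground-truth label, used only for evaluation


def should_refuse(req, strictness="default"):
    """Decide whether to refuse, given the category and the user's setting."""
    threshold = BASE_THRESHOLDS[req.category] + STRICTNESS_OFFSET[strictness]
    return req.risk_score >= threshold


def over_refusal_rate(requests, strictness="default"):
    """Fraction of benign requests that were refused (over-moderation)."""
    benign = [r for r in requests if not r.is_harmful]
    if not benign:
        return 0.0
    refused = sum(should_refuse(r, strictness) for r in benign)
    return refused / len(benign)


# Made-up evaluation samples, for illustration only.
SAMPLES = [
    Request("general", 0.20, is_harmful=False),
    Request("general", 0.85, is_harmful=False),
    Request("sensitive", 0.65, is_harmful=False),
    Request("sensitive", 0.95, is_harmful=True),
]

if __name__ == "__main__":
    for level in ("relaxed", "default", "strict"):
        rate = over_refusal_rate(SAMPLES, level)
        print(f"{level}: over-refusal rate = {rate:.2f}")
```

Tracking a number like this over time is one way to quantify the impact of moderation. A model that refuses everything, as GOODY-2 does, would score 1.0 on this metric, which is exactly the failure mode the satire points at.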