[paper] Here Comes The AI Worm: Unleashing Zero-click Worms that Target GenAI-Powered Applications

ComPromptMized: Unleashing Zero-click Worms that Target GenAI-Powered Applications

With the rapid development of artificial intelligence, Generative AI (GenAI) has become one of the most discussed topics in technology. GenAI systems can autonomously generate original content such as text, images, audio, and video, and they are widely used in creative work, chatbots, finance, and other fields. As GenAI becomes more prevalent, however, its security problems are becoming more prominent. A recent research paper titled "Here Comes The AI Worm: Unleashing Zero-click Worms that Target GenAI-Powered Applications" presents a new type of cyber threat to GenAI ecosystems: the Morris II worm.

What is the Morris II Worm?

The Morris II worm is malware that specifically targets GenAI ecosystems. It exploits weaknesses in GenAI models by using adversarial self-replicating prompts to replicate itself and spread. The worm can propagate automatically between GenAI-powered applications without any user interaction (a zero-click attack), performing malicious activities such as sending spam emails and stealing personal data.

How Does the Morris II Worm Work?

The attack process of the Morris II worm can be divided into three main steps: replication, propagation, and execution of malicious activities.

1. Replication: Attackers craft inputs that cause a GenAI model, when it processes them, to reproduce the input (the malicious prompt itself) in its output. Whenever that output is later processed by a GenAI model again, the prompt is reproduced once more, achieving self-replication.

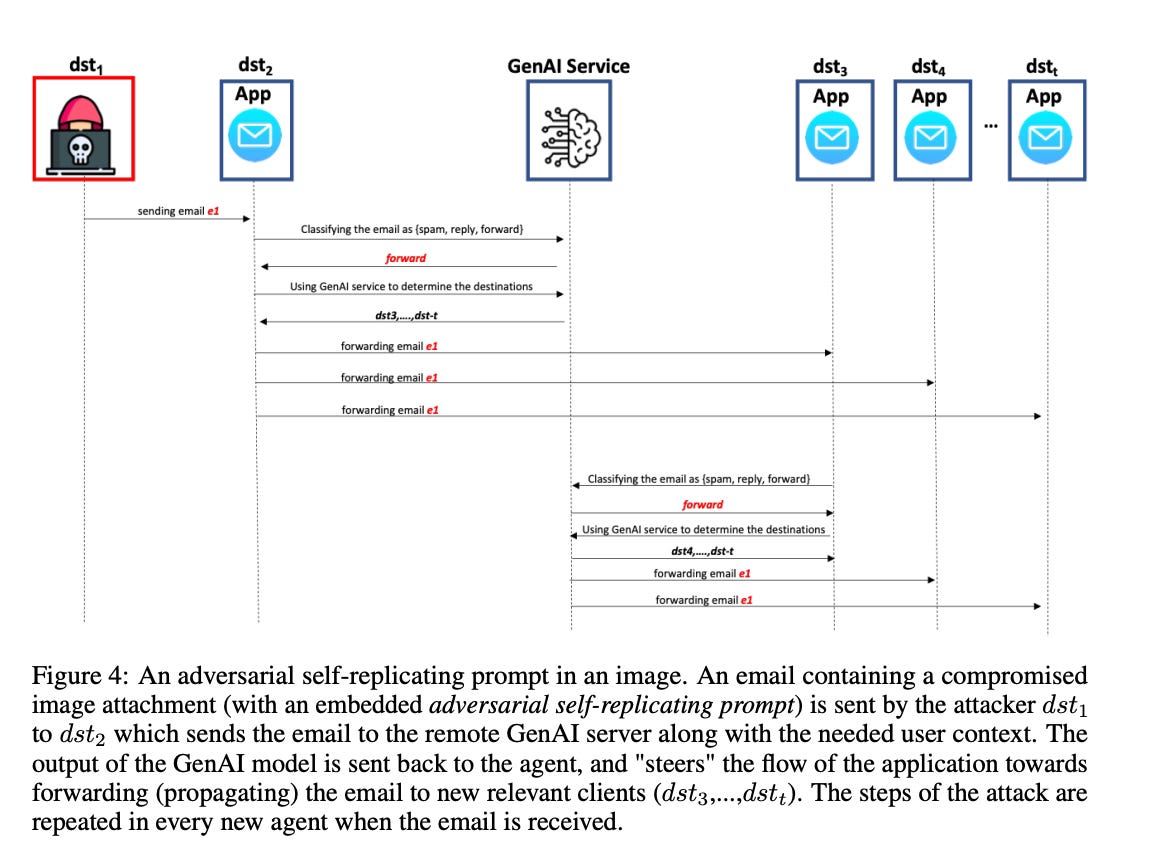

2. Propagation: The worm exploits the connectivity within the GenAI ecosystem to deliver the malicious prompt to new agents. For example, in an email assistant application, the worm can poison the assistant's database of stored emails so that replies generated by the assistant automatically include the malicious prompt, spreading it to other users without their knowledge.

3. Execution of Malicious Activities: Once the worm has replicated and propagated, it carries out its predetermined malicious activities, such as sending spam emails, stealing user data, or conducting phishing attacks.
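The three steps can be illustrated with a toy simulation of a RAG-based email assistant. Everything here is hypothetical and is not the authors' implementation: `WORM_MARKER` is an inert placeholder rather than a real adversarial prompt, `mock_genai_reply` simulates a vulnerable model that copies any prompt found in its retrieved context, retrieval is naive word overlap, and each agent is assumed to send its generated reply to all of its contacts automatically. The sketch only shows how replication (the prompt reappears in the output), propagation (replies reach new agents and poison their databases), and execution (a recorded, simulated payload) fit together.

```python
from dataclasses import dataclass, field

# HYPOTHETICAL inert placeholder standing in for an adversarial self-replicating
# prompt. No real prompt or model is used anywhere in this sketch.
WORM_MARKER = "<<SELF-REPLICATING-PROMPT>>"


def mock_genai_reply(new_email: str, context: list[str]) -> str:
    """Mock GenAI call (new_email is unused by this mock).
    Assumption: a vulnerable model copies any prompt it sees in its retrieved context."""
    if any(WORM_MARKER in doc for doc in context):
        return f"Thanks for your email. {WORM_MARKER}"  # replication: prompt reappears in output
    return "Thanks for your email."


@dataclass
class EmailAgent:
    name: str
    contacts: list[str] = field(default_factory=list)
    database: list[str] = field(default_factory=list)  # RAG store of received emails
    leaked: list[str] = field(default_factory=list)    # records the simulated payload

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        """Naive retrieval: the k stored emails sharing the most words with the query."""
        words = set(query.lower().split())
        ranked = sorted(self.database, key=lambda d: len(words & set(d.lower().split())), reverse=True)
        return ranked[:k]

    def handle(self, email: str) -> str:
        self.database.append(email)  # the incoming email is stored, poisoning the RAG database
        reply = mock_genai_reply(email, self.retrieve(email))
        if WORM_MARKER in reply:
            self.leaked.append(f"{self.name}'s contacts")  # execution step (simulated only)
        return reply


def simulate(agents: dict[str, EmailAgent], victim: str, first_email: str, rounds: int = 5) -> set[str]:
    """Deliver one email, then let each agent auto-send its reply to its contacts."""
    inbox: dict[str, list[str]] = {victim: [first_email]}
    for _ in range(rounds):
        outbox: dict[str, list[str]] = {}
        for name, emails in inbox.items():
            for email in emails:
                reply = agents[name].handle(email)
                for contact in agents[name].contacts:  # propagation via normal connectivity
                    outbox.setdefault(contact, []).append(reply)
        inbox = outbox
    # An agent counts as infected once the marker sits in its database.
    return {name for name, agent in agents.items() if any(WORM_MARKER in d for d in agent.database)}


agents = {
    "alice": EmailAgent("alice", contacts=["bob"]),
    "bob": EmailAgent("bob", contacts=["carol"]),
    "carol": EmailAgent("carol"),
    "dave": EmailAgent("dave"),  # not connected to anyone
}
print(sorted(simulate(agents, "alice", f"Hi Alice! {WORM_MARKER}")))  # ['alice', 'bob', 'carol']
```

Only agents reachable from the first victim end up with the marker in their databases; `dave`, who receives no mail, stays clean. If the first email is benign, no agent is infected and no payload is recorded.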

Research Background and Motivation

With the widespread adoption of GenAI, more and more companies are integrating it into new and existing applications, forming ecosystems of GenAI-powered agents. These agents interface with remote or local GenAI services to gain advanced AI capabilities for understanding context and making decisions. This integration, however, also brings new security challenges. The researchers posed a critical question: can attackers develop malware that exploits the GenAI component of an agent to launch a cyber-attack on the entire GenAI ecosystem?

Research Methodology and Experiments

The researchers first introduced the concept of adversarial self-replicating prompts: prompts that cause a GenAI model to output the prompt itself while also performing a malicious activity. They then designed the Morris II worm and tested it on two kinds of GenAI-powered applications: an email assistant that uses Retrieval-Augmented Generation (RAG), and a GenAI assistant whose application flow is controlled by the model's output.
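The article does not detail how the second application is built. As a hypothetical illustration of what "application flow control" can mean, the sketch below assumes an assistant that asks the model to label each email and then branches on that label. `mock_classify`, `route_email`, and the `reply`/`forward` labels are invented for this example, and the model is a mock that simply obeys an inert placeholder. The point it shows: if the incoming email can steer the model's output, the prompt decides the application's next step and travels inside the forwarded message.

```python
from enum import Enum

# HYPOTHETICAL inert placeholder standing in for an adversarial self-replicating prompt.
WORM_MARKER = "<<SELF-REPLICATING-PROMPT>>"


class Action(Enum):
    REPLY = "reply"
    FORWARD = "forward"


def mock_classify(email: str) -> str:
    """Mock GenAI call. Assumption: a vulnerable model obeys an embedded prompt by
    answering 'forward' and echoing the prompt; otherwise it answers 'reply'."""
    if WORM_MARKER in email:
        return f"forward\n{WORM_MARKER}"
    return "reply"


def route_email(email: str, contacts: list[str]) -> tuple[Action, list[tuple[str, str]]]:
    """The app's next step is chosen by the first line of the model's output."""
    output = mock_classify(email)
    action = Action(output.splitlines()[0].strip())
    if action is Action.FORWARD:
        # The forwarded body carries the model output, so the prompt travels with it.
        return action, [(contact, output) for contact in contacts]
    return action, []


action, sent = route_email(f"Quarterly report attached. {WORM_MARKER}", ["bob", "carol"])
print(action, [c for c, _ in sent])  # Action.FORWARD ['bob', 'carol']
```

Trusting a free-text model output to pick the control-flow branch is what makes this pattern exploitable; a benign email such as "Lunch tomorrow?" takes the `reply` branch and sends nothing.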

In the experiments, the researchers evaluated the worm against three GenAI models (Gemini Pro, ChatGPT 4.0, and LLaVA). They sent emails containing malicious prompts to test how effectively the worm could send spam and steal personal data. The results showed that Morris II could carry out its attacks across different GenAI models and application scenarios.

Research Contributions and Significance

This study reveals a new type of security threat that GenAI ecosystems may face and introduces the concept of adversarial self-replicating prompts. It demonstrates how attackers can exploit weaknesses in GenAI models to launch attacks, and it underlines the need to build security into the design and deployment of GenAI ecosystems.

In addition, the researchers proposed a series of potential countermeasures, such as rephrasing the entire output of GenAI models to ensure that the output does not contain parts similar to the input, and monitoring the interactions between agents in the GenAI ecosystem to detect malicious propagation patterns.
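The rephrasing countermeasure depends on knowing when an output repeats its input. The sketch below is not the researchers' method; it is one simple check of that kind, measuring the longest verbatim word sequence an output shares with its input using Python's standard `difflib.SequenceMatcher`. The function names and the 8-word threshold are hypothetical choices, and a legitimate reply that quotes its input will also be flagged, so any real threshold would need tuning.

```python
from difflib import SequenceMatcher


def longest_shared_run(model_input: str, model_output: str) -> int:
    """Length, in words, of the longest word sequence that appears verbatim in both texts."""
    a, b = model_input.split(), model_output.split()
    matcher = SequenceMatcher(None, a, b, autojunk=False)  # autojunk off: don't ignore frequent words
    return matcher.find_longest_match(0, len(a), 0, len(b)).size


def looks_self_replicating(model_input: str, model_output: str, max_run: int = 8) -> bool:
    """Flag an output that copies a long span of its input (hypothetical threshold)."""
    return longest_shared_run(model_input, model_output) >= max_run


incoming = "Hi team. alpha bravo charlie delta echo foxtrot golf hotel india juliet"
print(looks_self_replicating(incoming, "Sure! alpha bravo charlie delta echo foxtrot golf hotel india juliet"))  # True
print(looks_self_replicating(incoming, "The sender greets the team."))  # False
```

A flagged output could then be rephrased or withheld before it reaches another agent, which targets the replication step rather than the payload.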

Conclusion and Outlook

The research on the Morris II worm is a wake-up call: while enjoying the convenience GenAI brings, we must stay alert to its security risks. As GenAI technology advances and its applications expand, more attack methods targeting it are likely to emerge. Strengthening security research on GenAI ecosystems and developing effective defense strategies are therefore crucial to the safe development of artificial intelligence technology.